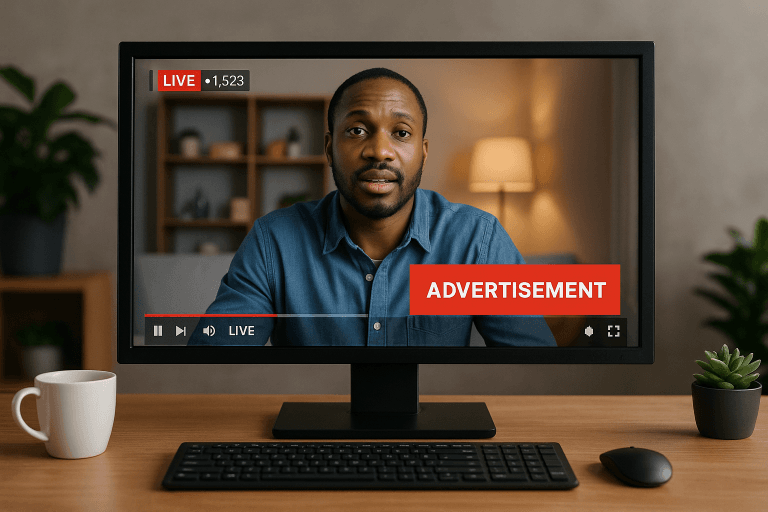

Many Wowza deployments fail for reasons unrelated to Wowza itself. The software is blamed for buffering, dropped streams, or instability, when the real issue lies beneath the operating system. Server sizing, hardware selection, and realistic capacity planning define whether a Wowza installation survives real-world usage or collapses under load.

Why Wowza performance starts with hardware

Wowza does not hide infrastructure complexity. Unlike fully abstracted cloud platforms, it exposes performance limits clearly. This is a strength, not a weakness. It allows operators to understand exactly where bottlenecks occur.

A Wowza server handles:

- Persistent incoming connections

- Continuous disk reads for playlists or DVR

- Memory buffering for stream stability

- Network output to thousands of viewers or CDNs

Each of these consumes different resources. Treating Wowza like a generic web application almost guarantees poor results.

CPU considerations: cores matter more than clock speed

Streaming workloads are highly parallel. Each stream, rendition, or protocol conversion adds processing threads. A server with a few high-frequency cores often performs worse than a server with a larger number of moderately clocked cores.

General guidelines:

- Live pass-through requires minimal CPU

- Transcoding increases CPU consumption sharply

- Multiple renditions multiply load quickly

Capacity planning should assume peak usage, not average usage. A Wowza server running comfortably at 40% CPU during tests may hit saturation during real events.
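The gap between test load and event load can be estimated with simple arithmetic. The sketch below assumes CPU use grows roughly linearly with the number of concurrent streams, which is a simplification. The function names, the 70% ceiling, and the example load figures are illustrative assumptions, not Wowza settings.

```python
def projected_cpu_percent(test_cpu_percent: float, test_load: int, peak_load: int) -> float:
    """Linearly extrapolate CPU use from a measured test load to an expected peak load.

    Assumes CPU scales in proportion to load, which is only a rough approximation.
    """
    if test_load <= 0:
        raise ValueError("test_load must be positive")
    return test_cpu_percent * peak_load / test_load


def has_headroom(projected_percent: float, ceiling_percent: float = 70.0) -> bool:
    """Return True if the projected CPU use stays at or below a chosen ceiling.

    The 70% default is a hypothetical planning target, not a vendor figure.
    """
    return projected_percent <= ceiling_percent


# Example: 40% CPU measured with 200 test streams, 600 streams expected at peak.
projected = projected_cpu_percent(40.0, test_load=200, peak_load=600)
print(f"projected peak CPU: {projected:.0f}%")
print("within headroom:", has_headroom(projected))
```

Under these assumed numbers, a test that looked comfortable projects to more than the server can deliver, which is the situation the paragraph above warns about.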

Memory usage and stream buffering

RAM is frequently underestimated in Wowza environments. Memory buffers smooth network jitter and protect against ingest instability. Insufficient RAM leads to cascading failures that look like network issues.

Common mistakes include:

- Deploying Wowza on minimal-memory VPS instances

- Running OS-level services that compete for memory

- Ignoring Java heap tuning

Well-sized servers allocate memory generously, ensuring the streaming engine never fights the operating system for resources.
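As a rough illustration of keeping the Java heap from competing with the operating system, the sketch below sets aside memory for the OS and other services before deciding how much to give the JVM (the value that would end up in the standard `-Xmx` option). The reserve sizes and the 60% cap are hypothetical planning figures, not recommendations from Wowza.

```python
def heap_budget_gb(
    total_ram_gb: float,
    os_reserve_gb: float = 2.0,
    other_services_gb: float = 0.0,
    max_fraction: float = 0.6,
) -> float:
    """Suggest a JVM heap size that leaves room for the OS and other processes.

    All defaults are illustrative assumptions; measure your own system.
    """
    available = total_ram_gb - os_reserve_gb - other_services_gb
    if available <= 0:
        raise ValueError("no memory left for the streaming engine")
    # Cap the heap at a fraction of total RAM so buffers and caches outside
    # the heap still have space.
    return min(available, total_ram_gb * max_fraction)


# Example: a 16 GB server running 1 GB of other services.
print(f"suggested heap: {heap_budget_gb(16, other_services_gb=1.0):.1f} GB")

# Example: a 4 GB VPS, where the reserve leaves very little for the heap.
print(f"suggested heap: {heap_budget_gb(4):.1f} GB")
```

The second example shows why minimal-memory VPS instances are a common mistake: after the operating system takes its share, little remains for the streaming engine.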

Disk I/O and its hidden impact

Live streaming is not disk-intensive, until it is. Playlists, catch-up TV, DVR, and on-demand fallback all depend on disk performance. Slow storage introduces latency and stuttering even when CPU and bandwidth appear healthy.

SSD or NVMe storage is strongly preferred. Traditional HDDs introduce unpredictable seek times that break continuous playback. For TV-style workloads, disk performance becomes a core reliability factor.
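To get a feel for how quickly DVR and catch-up workloads reach the disk, the following sketch converts stream bitrate into stored volume and sustained write rate. It assumes constant-bitrate streams and ignores filesystem and container overhead; the function names and example figures are illustrative.

```python
def dvr_storage_gb(bitrate_mbps: float, window_hours: float, renditions: int = 1) -> float:
    """Approximate disk space for a DVR window, in decimal gigabytes."""
    seconds = window_hours * 3600
    megabits = bitrate_mbps * seconds * renditions
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes


def sustained_write_mb_per_s(bitrate_mbps: float, streams: int) -> float:
    """Approximate continuous write rate when recording several streams at once."""
    return bitrate_mbps * streams / 8  # megabits per second -> megabytes per second


# Example: one 3 Mbps channel with a 24-hour catch-up window.
print(f"storage per channel: {dvr_storage_gb(3, 24):.1f} GB")

# Example: 20 channels recorded at the same time.
print(f"sustained writes: {sustained_write_mb_per_s(3, 20):.1f} MB/s")
```

The write rate alone is modest, but viewers seeking inside the DVR window add random reads on top of it, which is where seek-bound HDDs tend to struggle.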

Network throughput and real bandwidth math

Bandwidth is often marketed incorrectly. A “1 Gbps port” does not guarantee sustained throughput. Shared uplinks, oversubscription, and routing policies all affect real capacity.

Operators should calculate:

- Average bitrate per stream

- Peak concurrent viewers

- Protocol overhead

- Redundancy margins

Serving 500 viewers at 3 Mbps each means 1.5 Gbps of video payload alone, which already exceeds a single 1 Gbps port. Once protocol overhead and traffic spikes are included, the required capacity is higher still.
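The four inputs listed above can be combined into a single estimate. This is a minimal sketch: the 10% protocol overhead and 25% redundancy margin are assumed placeholder values, and real overhead depends on the delivery protocol and segment sizes.

```python
def required_bandwidth_gbps(
    viewers: int,
    bitrate_mbps: float,
    overhead: float = 0.10,
    headroom: float = 0.25,
) -> float:
    """Estimate the outbound capacity needed for a peak audience, in Gbps.

    overhead: assumed fraction added by protocol and packet framing.
    headroom: assumed safety margin for spikes and redundancy.
    """
    payload_mbps = viewers * bitrate_mbps
    with_overhead = payload_mbps * (1 + overhead)
    return with_overhead * (1 + headroom) / 1000


# Example from the text: 500 peak viewers at 3 Mbps each.
payload = 500 * 3 / 1000
needed = required_bandwidth_gbps(500, 3)
print(f"payload only: {payload:.2f} Gbps")
print(f"with overhead and margin: {needed:.2f} Gbps")
```

With these assumed margins the 1.5 Gbps payload grows to roughly 2 Gbps of required capacity, so the port size has to be chosen from the padded figure rather than the raw one.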

Providers specializing in streaming hardware often design around these realities. For example, RTMP Server focuses on bandwidth-first server planning rather than generic hosting assumptions.

Dedicated servers vs virtualized environments

Virtual machines are convenient but risky for sustained streaming. Resource contention, noisy neighbors, and unpredictable throttling undermine long-running workloads.

Dedicated servers provide:

- Guaranteed CPU cycles

- Predictable disk performance

- Stable network paths

For production workloads, dedicated environments remain the preferred choice. This is especially true when scaling beyond experimental or internal use.

Scaling strategies: vertical vs horizontal

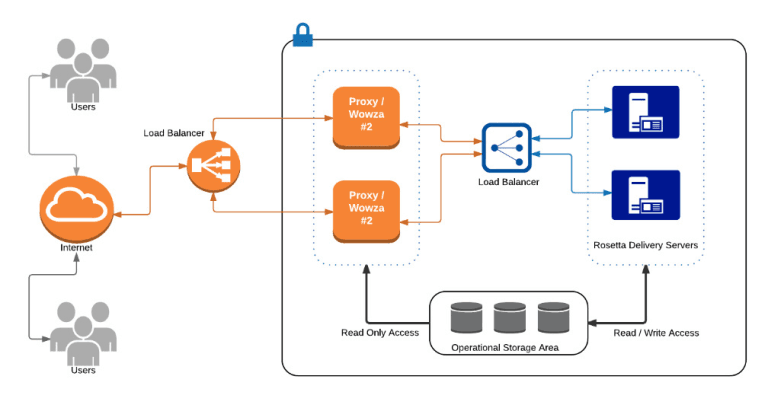

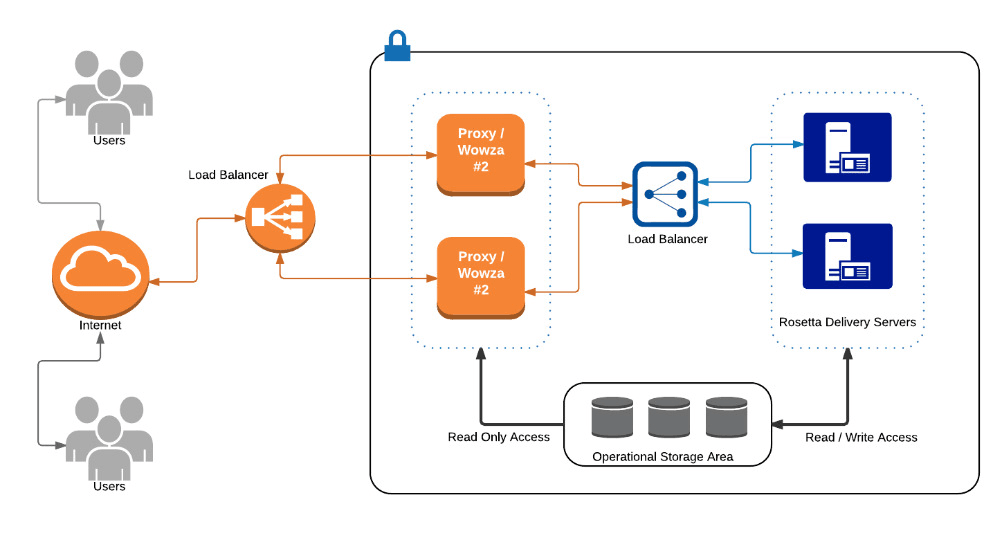

Scaling Wowza is not only about adding servers. It is about deciding how and where to scale.

Vertical scaling increases server size. Horizontal scaling adds servers and distributes load. Each has trade-offs.

| Strategy | Advantages | Limitations |
|---|---|---|
| Vertical scaling | Simplicity | Hard limits |
| Horizontal scaling | Resilience | Operational complexity |
| CDN offloading | Viewer scale | Less control |

Many successful deployments combine all three. Wowza’s predictable behavior simplifies this hybrid approach.
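For the horizontal part of such a setup, the number of delivery servers follows from peak traffic and the usable throughput of each machine. The sketch below is an assumption-laden estimate: `usable_mbps_per_server` is a figure you would measure yourself, and the single spare server is a hypothetical N+1 redundancy policy.

```python
import math


def servers_needed(
    peak_viewers: int,
    bitrate_mbps: float,
    usable_mbps_per_server: float,
    spare: int = 1,
) -> int:
    """Estimate how many delivery servers cover peak traffic plus spares.

    usable_mbps_per_server should be the sustained throughput you have
    actually measured, not the advertised port speed.
    """
    if usable_mbps_per_server <= 0:
        raise ValueError("usable_mbps_per_server must be positive")
    total_mbps = peak_viewers * bitrate_mbps
    return math.ceil(total_mbps / usable_mbps_per_server) + spare


# Example: 2000 peak viewers at 3 Mbps, 800 Mbps usable per server, one spare.
print("servers needed:", servers_needed(2000, 3, usable_mbps_per_server=800))
```

Traffic handed to a CDN can be subtracted from `peak_viewers` before running the estimate, which is how CDN offloading reduces the server count.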

Monitoring and proactive capacity management

Monitoring is part of server sizing. Without visibility, capacity planning becomes guesswork. Key metrics include:

- CPU load per stream

- Memory utilization trends

- Network saturation

- Stream error rates
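A simple way to turn these metrics into early warnings is to compare each snapshot against fixed limits. The sketch below is not tied to any Wowza API: the `Sample` fields, the threshold values, and the warning texts are all hypothetical, and how the numbers are collected is left to whatever monitoring agent is in use.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Sample:
    """One monitoring snapshot; field names are illustrative, not a Wowza API."""
    cpu_percent: float
    memory_percent: float
    network_mbps: float
    stream_errors: int


# Hypothetical alert thresholds; tune them to your own baseline.
CPU_LIMIT = 75.0
MEMORY_LIMIT = 80.0
NETWORK_LIMIT = 0.80  # fraction of usable port capacity
ERROR_LIMIT = 0


def capacity_warnings(sample: Sample, port_capacity_mbps: float) -> List[str]:
    """Return a warning for every metric that exceeds its limit."""
    warnings = []
    if sample.cpu_percent > CPU_LIMIT:
        warnings.append("CPU above limit")
    if sample.memory_percent > MEMORY_LIMIT:
        warnings.append("memory above limit")
    if sample.network_mbps / port_capacity_mbps > NETWORK_LIMIT:
        warnings.append("network near saturation")
    if sample.stream_errors > ERROR_LIMIT:
        warnings.append("stream errors detected")
    return warnings


snapshot = Sample(cpu_percent=85.0, memory_percent=50.0, network_mbps=900.0, stream_errors=0)
for warning in capacity_warnings(snapshot, port_capacity_mbps=1000.0):
    print(warning)
```

Running a check like this on a schedule and keeping the history gives the utilization trends that capacity planning depends on.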

Providers offering managed Wowza environments often include proactive monitoring to detect issues before viewers notice. Hosting Marketers emphasizes this operational layer as part of its Wowza server offerings:

https://hosting-marketers.com/wowza-streaming/

Cost efficiency through correct sizing

Overprovisioning wastes money. Underprovisioning damages reputation. Correct sizing balances both.

A well-sized server:

- Runs at moderate utilization during peaks

- Has headroom for failures

- Avoids emergency scaling

This balance is what separates experimental streaming from professional operations.

Independent technical references

For neutral background on Wowza’s architecture and deployment considerations, Wikipedia offers a high-level technical overview suitable for contextual understanding.

For practical demonstrations of server sizing, performance tuning, and scaling discussions, the official Wowza YouTube channel provides detailed walkthroughs and engineering-focused content:

https://www.youtube.com/@WowzaMedia

Related reading

This article focuses on hardware and capacity. A complementary infrastructure-level perspective is explored on wowza-hosting.com, which examines why Wowza continues to serve as a foundational streaming engine across professional environments.

Final perspective

Wowza does not fail silently. When servers are undersized, it exposes the problem clearly. This honesty is precisely why it remains trusted in professional streaming environments. Correct server sizing transforms Wowza from “just another media server” into a stable foundation capable of supporting serious, long-term streaming operations.